The speed of decision making is the essence of good governance.

—Piyush Goyal, Minister of Railways and Coal, Government of India

Adapting Governance Practices to Support Agility and Lean Flow of Value

This is article nine in the SAFe for Government (S4G) series.

Governments are among the world’s largest purchasers of technology solutions intended to address some aspect of the health and welfare of the citizens they represent. They also have a mandate to spend taxpayer money wisely, to avoid waste, and to actively manage the risk that a program or a deployed solution will fail. Governance over the amount spent and the efficacy of the solutions they procure is therefore mandatory.

For many reasons, government programs must contend with multiple layers of oversight throughout the development lifecycle. In some cases, the guidelines are internal to the agency that owns the program, but in many instances, programs must adhere to policies set by other agencies or by law. Traditionally, the phase-gate or waterfall method was used to implement oversight. It’s not surprising, then, that the stakeholders responsible for governance are often concerned when changes are proposed to support the continuous value flow paradigm represented by Lean-Agile and SAFe.

This final installment of the SAFe for Government series addresses a core challenge: current governance practices often perpetuate legacy waterfall processes and, as a result, may not accomplish their intended purpose of managing risk and spending. In fact, these legacy practices often produce the opposite results:

- Introduce unnecessary waste and delays

- Increase program risk

- Reduce overall economic outcomes for the taxpayer

The following sections provide recommendations for ensuring that sound governance continues even after agencies adopt a continuous delivery lifecycle with SAFe.

Details

In this context, contractors and solution builders who simply want to deliver valuable capabilities as quickly as possible often view governance as a necessary evil. This perception is driven largely by traditional oversight processes that are seen as obsolete, redundant, and documentation-heavy, and that serve only to delay the release of critical functionality. Interactions between program practitioners and “the inspectors” can be strained, if not openly combative, with each side blaming the other for defects and delays.

To be fair, governance is not the natural enemy of the lean flow of value in technology development. In fact, governance plays a vital role in many high-assurance systems where the economic and human cost of failure is unacceptable. It is merely a framework for decision-making and oversight to ensure that programs achieve the desired mission outcomes. The dimensions of effective governance include:

- Objectives – Did the program deliver the intended capabilities?

- Constraints – Did the program deliver within time, cost, scope, and quality guardrails?

- Compliance – Did the program meet statutory, regulatory, and best-practice guidelines?

What’s needed is a re-evaluation of the processes used to exercise these oversight responsibilities, one that respects the value governance provides to the Agile Release Train (ART) and to the various stakeholders of the resulting solution.

The following sections provide perspectives on how governance can evolve into a supportive and enabling process for technology programs using Lean-Agile, DevOps, and SAFe.

Evolving to Lean-Agile Governance

As with the other topics addressed in this series, such as budgeting, forecasting, and contracting, the first step in adapting governance practices to support Lean-Agile is to recognize the contrasting patterns between traditional oversight approaches and the ways these responsibilities are carried out in a Lean-Agile environment. Table 1 summarizes the differences.

Lean-Agile methods do not argue that accountability for program planning and execution within established boundaries is unnecessary. On the contrary, the values, principles, and practices promoted by SAFe are frequently viewed as more rigorous than previous approaches because of their focus on transparency and on evaluating progress through objective evidence (i.e., demonstration of working systems as the primary measure of progress and performance). Governance remains important with Lean-Agile. As the right column of Table 1 shows, what has to change is how the oversight responsibilities are carried out.

The comparisons in Table 1 come down to one point: technology programs can face many unknowns in the early stages of development, which often rules out precise estimates at the detail level for requirements, design, tasks, and so on. Traditional practices assume that all aspects of system development can be known up front and that success can be achieved as long as variance from the initial plan is tightly controlled. However, decades of failed projects have shown that an iterative and incremental model, which permits discretion within broader time, cost, and scope boundaries, is more appropriate when the solution requires innovation and exploration of uncharted territory.

Some agencies in the U.S. government have recognized the need to adapt internal oversight regulations to the new way of working described by SAFe, and they have modified their processes to include alternative models for governing Lean-Agile programs. One of the most progressive examples in the U.S. is the Department of Homeland Security (DHS), which has modified its guidance to treat Lean-Agile as the default planning and execution model for technology development. DHS’s governance and oversight practices have evolved to align with this new way of working. (The DHS governance document can be downloaded from the SAFe for Government resources page.)

The U.S. Government Accountability Office (GAO), the agency responsible for auditing federal programs, has also created a new guide for its auditors to use when evaluating programs that employ a Lean-Agile process model. [1]

Streamline Execution Reviews to PI Cadence

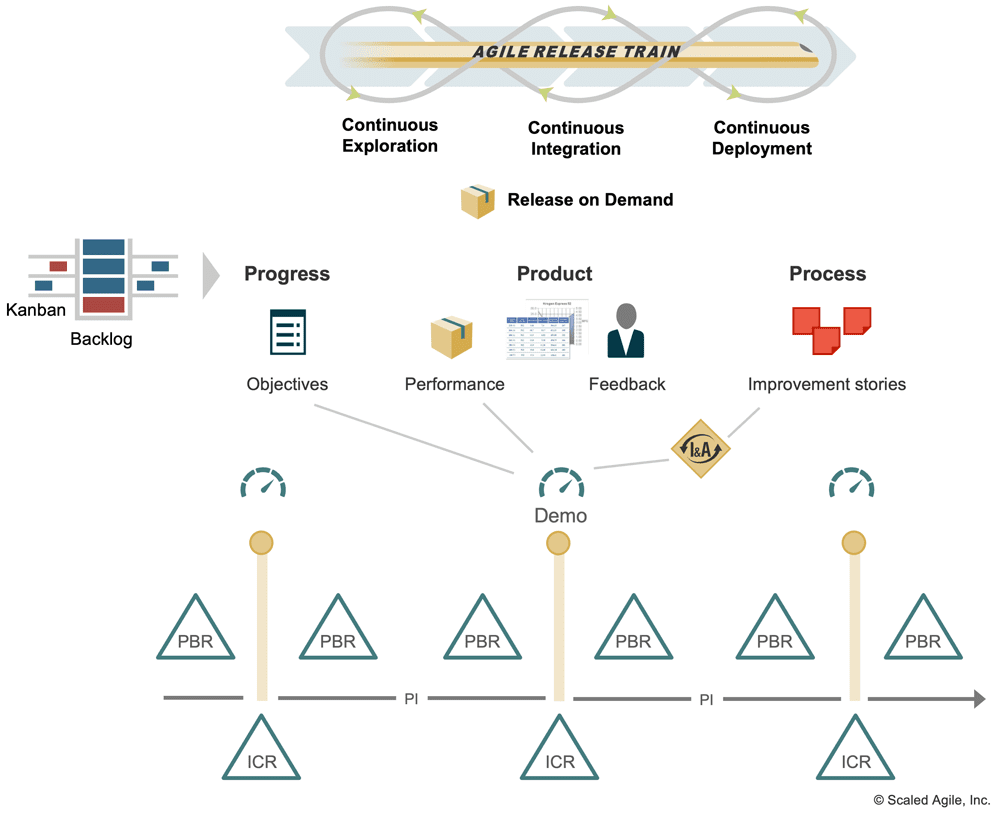

One of the success patterns we have observed in government programs using SAFe is replacing a long list of assessments by different groups and committees with a consolidated review schedule that aligns with the PI cadence and events. One recommended model condenses most programmatic reviews into two types of milestones: the Incremental Capability Review (ICR) and the ART Backlog Review (PBR, an acronym carried over from its earlier name, Program Backlog Review), as illustrated in Figure 1.

The ICR aligns with the Inspect & Adapt (I&A) and PI Planning events that occur at the PI boundaries in SAFe. I&A includes a comprehensive demonstration of the system development progress made during the previous PI. Because each feature planned for the PI is either working or not, the demo provides objective evidence of real progress. The demo can also provide key proof points on quality and compliance that governance teams can watch closely. In addition, the standard I&A agenda includes a performance reporting item, which can incorporate the metrics required to demonstrate that the program is operating within the relevant governance guidelines.

The PBR aligns with the frequent backlog refinement sessions (updates to the work remaining) that occur between the PI boundaries. This event is where program stakeholders make decisions about the scope and priority of the development backlog. Governance personnel focused on scope management can participate in each PBR for direct, real-time insight into what the program is delivering and into the trade-off decisions that will inevitably be needed as new information emerges.
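To make the consolidated cadence more concrete, the following Python sketch generates a review calendar in which each PI closes with an ICR (covering I&A for that PI and PI Planning for the next) and PBRs fall between the PI boundaries. The PI shape (five two-week iterations) and the choice of one PBR at the start of each iteration after the first are assumptions for illustration only; the model described here does not prescribe a PBR frequency, and `Review` and `review_calendar` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Review:
    kind: str  # "ICR" (on a PI boundary) or "PBR" (between boundaries)
    pi: int    # number of the PI the review belongs to
    on: date   # scheduled date


def review_calendar(first_pi_start: date, num_pis: int,
                    iterations_per_pi: int = 5,
                    iteration_weeks: int = 2) -> list[Review]:
    """Hypothetical consolidated review calendar.

    Assumptions (illustrative only): fixed-length iterations, and one PBR
    at the start of every iteration except the first iteration of each PI.
    """
    iteration = timedelta(weeks=iteration_weeks)
    pi_length = iteration * iterations_per_pi
    reviews = []
    for pi in range(1, num_pis + 1):
        start = first_pi_start + pi_length * (pi - 1)
        # PBRs fall between PI boundaries, once per iteration (assumed frequency).
        for i in range(1, iterations_per_pi):
            reviews.append(Review("PBR", pi, start + iteration * i))
        # The ICR sits on the closing PI boundary: I&A for this PI, then PI Planning for the next.
        reviews.append(Review("ICR", pi, start + pi_length))
    return reviews


if __name__ == "__main__":
    for r in review_calendar(date(2024, 1, 8), num_pis=2):
        print(f"PI {r.pi}  {r.kind}  {r.on.isoformat()}")
```

The point of the sketch is that governance touchpoints become a predictable function of the PI cadence rather than a separate schedule of committee reviews; a program with a different PI length or refinement rhythm would change only the two default parameters.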

Although many programmatic milestone reviews can be consolidated into the ICR and PBR, other milestone events will remain unchanged. For example, the test flight process for new aircraft can only be conducted once the plane is ready to fly. However, with frequent and incremental checks throughout the development process, these final milestones will be more predictable, and the systems themselves will contain fewer defects.

Embrace the Inspectors

One common question is how to change governance models that are often entrenched in waterfall practices when the ownership of those policies resides outside the program’s span of control. Clearly, such changes require patience and persistence. The current guidelines have evolved over many years. Adapting them to the new way of working will require a lot of consensus-building.

Change agents should start with a mindset of respecting and valuing the governance officials’ role. Discussions of possible changes to policy and process should be built on a foundation of open, positive, and proactive relationships with the people who hold oversight responsibilities. Start from a position of “do no harm,” acknowledging that any change to governance processes must still accomplish the original intent and enable governance teams to execute their responsibilities. New processes should be built collaboratively so that all parties have ownership of the new approach. In his book Turn the Ship Around!, David Marquet describes this as “embrace the inspectors.” [2] This approach recognizes that governance officials have intimate knowledge of the rules and have observed both good and bad implementation patterns across many programs. As a result, they represent a wealth of information and a voice that should be heard as new process models are formed.

Adopt Agile Earned Value Management (EVM) as Required

A common governance concern for government programs adopting Agile is the use of Earned Value Management (EVM), as described in the Project Management Institute’s (PMI) Project Management Body of Knowledge (PMBOK), to assess cost and schedule performance. While EVM is not a common practice for managing Agile development worldwide, some government agencies (particularly the Department of Defense in the U.S.) are currently mandated by law to use EVM on large programs. In these instances, whether EVM is useful is moot; program managers using SAFe in this context must meet EVM reporting requirements. Fortunately, government experts have already done much of the work to provide guidance for using EVM with Agile lifecycle models.

For programs using SAFe, Figure 2 reflects the best practice for mapping backlog items to their EVM equivalents at various levels.

In this model, the top-level control account in EVM can contain and manage one or more Epics in SAFe. The Features in each epic map to planning packages while they represent future work on the Roadmap and become work packages when detailed plans are created during PI Planning. Story details for each feature (scope, time, effort) provide the quantifiable supporting data EVM requires. The ART’s labor cost for the PI is known (material and other non-labor costs are a different matter), and each planned feature represents some percentage, X%, of the ART’s total capacity for the PI. The planned cost of each feature is therefore simply X% of the PI’s labor cost. At the end of the PI, we also know the actual percentage of the train’s capacity consumed to build each feature, so we have all the data needed to compute the planned-versus-actual EVM measures.
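The arithmetic described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a prescribed EVM method: it counts labor cost only, expresses capacity as fractions of the ART’s PI capacity, and assumes a 0/100 earning rule in which a feature earns its planned value only if it is working at the I&A demo. `Feature` and `evm_for_pi` are hypothetical names. The ratios follow the standard EVM definitions, CPI = EV / AC and SPI = EV / PV.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    planned_share: float  # fraction of the ART's PI capacity planned for this feature
    actual_share: float   # fraction of the ART's PI capacity actually consumed
    done: bool            # True if the feature was working at the I&A demo


def evm_for_pi(features: list[Feature], pi_labor_cost: float) -> dict[str, float]:
    """Sketch of PI-level EVM measures derived from capacity shares.

    Assumptions: labor cost only (non-labor costs excluded) and a 0/100
    earning rule, so a feature earns its planned value only when done.
    """
    # Planned value (PV): planned cost of every feature committed for the PI.
    pv = sum(f.planned_share * pi_labor_cost for f in features)
    # Earned value (EV): planned cost of the features actually completed.
    ev = sum(f.planned_share * pi_labor_cost for f in features if f.done)
    # Actual cost (AC): capacity actually consumed, priced at the PI labor cost.
    ac = sum(f.actual_share * pi_labor_cost for f in features)
    return {
        "PV": pv,
        "EV": ev,
        "AC": ac,
        "CPI": ev / ac if ac else float("nan"),  # cost performance index
        "SPI": ev / pv if pv else float("nan"),  # schedule performance index
    }


if __name__ == "__main__":
    features = [
        Feature("Feature A", planned_share=0.40, actual_share=0.45, done=True),
        Feature("Feature B", planned_share=0.35, actual_share=0.30, done=True),
        Feature("Feature C", planned_share=0.25, actual_share=0.15, done=False),
    ]
    metrics = evm_for_pi(features, pi_labor_cost=2_000_000)
    print({k: round(v, 2) for k, v in metrics.items()})
```

With the illustrative data above (a PI labor cost of 2,000,000 and three features planned at 40%, 35%, and 25% of capacity, of which the first two are done), PV is 2,000,000, EV is 1,500,000, and AC is 1,800,000, giving an SPI of 0.75 and a CPI of about 0.83. The 0/100 rule mirrors the idea that a feature is either working or not; a program required to use a different earning rule would change only the `ev` calculation.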

While EVM ratios can be populated using this technique, they don’t have the same utility in SAFe, because the system is built in small batches and the plan is refactored, or ‘rebaselined,’ at every PI boundary. Further, unlike a traditional project plan with a static scope, an Agile plan anticipates change; after all, that is what creates agility. The net result is that the need for EVM in SAFe is not as great as in traditional waterfall projects. When following Lean-Agile practices, there are better, faster, and less complicated ways to get the same or more beneficial results. But when EVM is mandated, SAFe practices can be adapted to provide the data required to meet EVM reporting requirements (for more information on using EVM on Agile programs, refer to Agile and Earned Value Management: A Program Manager’s Desk Guide [3]).

Learn More

[1] GAO Agile Assessment Guide. U.S. Government Accountability Office, September 2020. https://www.gao.gov/assets/710/709711.pdf

[2] Marquet, L. David. Turn the Ship Around!: A True Story of Turning Followers Into Leaders. Portfolio, 2012.

[3] Agile and Earned Value Management: A Program Manager’s Desk Guide. https://www.acq.osd.mil/asda/ae/ada/ipm/docs/AAP%20Agile%20and%20EVM%20PM%20Desk%20Guide%20Update%20Approved%20for%20Nov%202020_FINAL.pdf

Last update: 15 November 2022